Published 2026-01-19

Imagine this: you are responsible for a complex data product, such as a monitoring system or an application that processes real-time information. At first everything runs smoothly; the code is tidy and the responsibilities are clear. But as data volumes snowball and new requirements emerge, things start to get a little... tricky.

Does every change to a core function mean nervously retesting and redeploying the entire system? Does the data pipeline feel more and more congested, like a metro ring line at rush hour, where one small failure can bring the whole line to a halt? Worse still, when you want to try a new technology or expand a single module, you hold back, because pulling on one thread moves the whole fabric.

If you are nodding, you may be experiencing the typical "growing pains" of a monolithic architecture in a data-intensive application. All functions are tightly coupled. That is simple at first, but once you need flexibility and scale, the monolith turns into a bulky, fragile behemoth.

At this point, someone will inevitably bring up microservice architecture. It sounds technical, but the idea is intuitive: instead of building one giant tanker, build a fleet of nimble speedboats.

The traditional approach to data processing is like one large warehouse crammed with every tool and production line. Order processing, user analysis, and report generation all happen in one place. If one step gets stuck, everything else has to wait.

The idea behind microservices is to split things up. You set up an independent "specialty workshop" for each core data function. For example, one microservice only receives and validates raw data streams, acting as a dedicated gatekeeper; another cleans and formats the data, like a meticulous cleaner; a third focuses on complex analysis and aggregation, like an analyst. Each has its own runtime environment and database, and they talk to each other through clear, lightweight interfaces such as APIs.
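To make the split concrete, here is a minimal in-process sketch of the gatekeeper, cleaner, and analyst. It is illustrative only: the class names, the `sensor`/`value` fields, and the validation rules are hypothetical. In a real deployment each class would be a separate process, and the plain dicts passed between them would be API requests or messages. What the sketch shows is that each service owns its own storage and depends only on the shape of the messages it receives.

```python
from statistics import mean
from typing import Dict, Iterable, List, Optional

# Hypothetical in-process sketch: each class stands in for a separately
# deployed service with its own storage. The dicts passed between them
# play the role of the API messages that form the contract.

class IngestService:
    """Gatekeeper: accepts raw events and rejects malformed ones."""

    def __init__(self) -> None:
        self.accepted: List[dict] = []  # this service's own data store

    def receive(self, raw: dict) -> Optional[dict]:
        if "sensor" not in raw or "value" not in raw:
            return None  # reject: required fields missing
        self.accepted.append(raw)
        return {"sensor": str(raw["sensor"]), "value": raw["value"]}


class CleaningService:
    """Cleaner: normalizes sensor names and converts values to floats."""

    def __init__(self) -> None:
        self.cleaned: List[dict] = []

    def clean(self, msg: dict) -> Optional[dict]:
        try:
            value = float(msg["value"])
        except (TypeError, ValueError):
            return None  # drop values that cannot be parsed
        record = {"sensor": msg["sensor"].strip().lower(), "value": value}
        self.cleaned.append(record)
        return record


class AnalyticsService:
    """Analyst: aggregates clean records per sensor."""

    def __init__(self) -> None:
        self.by_sensor: Dict[str, List[float]] = {}

    def add(self, record: dict) -> None:
        self.by_sensor.setdefault(record["sensor"], []).append(record["value"])

    def average(self, sensor: str) -> Optional[float]:
        values = self.by_sensor.get(sensor)
        return mean(values) if values else None


def run_pipeline(events: Iterable[dict], ingest: IngestService,
                 cleaner: CleaningService, analytics: AnalyticsService) -> None:
    """Pass each event through the three services in order."""
    for raw in events:
        msg = ingest.receive(raw)
        if msg is None:
            continue
        record = cleaner.clean(msg)
        if record is not None:
            analytics.add(record)


ingest, cleaner, analytics = IngestService(), CleaningService(), AnalyticsService()
events = [
    {"sensor": " T1 ", "value": "20.5"},
    {"sensor": "t1", "value": 21.5},
    {"value": 3},                      # rejected by the gatekeeper
    {"sensor": "t2", "value": "n/a"},  # dropped by the cleaner
]
run_pipeline(events, ingest, cleaner, analytics)
print(analytics.average("t1"))  # 21.0
```

Because the analyst sees only cleaned records, you could rewrite the cleaner internally without touching the analyst, as long as the record shape stays the same.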

Doing so brings several tangible benefits:

First, resilience. Overhauling or upgrading one "workshop" (service) does not stop the whole "factory". Is the data ingestion service having problems? The analysis service can keep working on the clean data it already holds, so the availability of the system as a whole improves significantly.
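The resilience argument rests on one design choice: the analysis service keeps its own copy of clean data and does not require the ingestion service to be up in order to answer. The sketch below illustrates that choice under stated assumptions. The upstream call is simulated by a plain Python function that raises `ConnectionError`, and `AnalysisService`, `refresh`, and `flaky_ingestion` are hypothetical names.

```python
from typing import Callable, List

# Hypothetical sketch: the analysis service keeps its own copy of clean data,
# so an outage in the upstream ingestion service reduces data freshness
# instead of making the analysis unavailable.

class AnalysisService:
    def __init__(self, fetch_upstream: Callable[[], List[float]]) -> None:
        self._fetch_upstream = fetch_upstream  # stands in for a network call
        self._values: List[float] = []        # data this service already owns
        self.upstream_healthy = True

    def refresh(self) -> None:
        """Pull new clean data; on failure, keep serving what we have."""
        try:
            self._values.extend(self._fetch_upstream())
            self.upstream_healthy = True
        except ConnectionError:
            self.upstream_healthy = False

    def average(self) -> float:
        if not self._values:
            raise LookupError("no data received yet")
        return sum(self._values) / len(self._values)


# Usage: the first refresh succeeds, the second hits a simulated outage.
pending_batches = [[10.0, 20.0]]

def flaky_ingestion() -> List[float]:
    if pending_batches:
        return pending_batches.pop()
    raise ConnectionError("ingestion service unavailable")

service = AnalysisService(flaky_ingestion)
service.refresh()
service.refresh()
print(service.upstream_healthy, service.average())  # False 15.0
```

The trade-off is explicit here. During an outage, answers are based on older data, and the `upstream_healthy` flag lets callers or monitoring know that.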

Second, elasticity. A sudden flood of incoming data? No problem: you allocate more compute only to the ingestion microservice (start a few more instances), just as a store opens extra checkout lanes at peak times, and the other services are left untouched. This ability to scale on demand is critical for handling traffic fluctuations and keeping costs under control.
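"Start a few more instances" can be turned into a simple rule. The sketch below uses proportional scaling of the form `ceil(current_replicas * current_load / target_load)`, which is the same shape as the rule documented for the Kubernetes Horizontal Pod Autoscaler. It is clamped to a minimum and a maximum. The service names, replica counts, and the 300 events/s target are hypothetical values chosen for illustration. The key point is that only the ingestion entry changes.

```python
import math
from typing import Dict

def desired_replicas(current_replicas: int, load_per_replica: float,
                     target_load_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    desired = math.ceil(current_replicas * load_per_replica / target_load_per_replica)
    return max(min_replicas, min(max_replicas, desired))

# Hypothetical replica counts for three independently deployed services.
replicas: Dict[str, int] = {"ingest": 2, "clean": 2, "analytics": 1}

# A traffic spike: each ingestion instance now handles 900 events/s,
# while the (assumed) comfortable target is 300 events/s per instance.
replicas["ingest"] = desired_replicas(replicas["ingest"], 900, 300)
print(replicas)  # {'ingest': 6, 'clean': 2, 'analytics': 1}
```

In a monolith the same spike would force you to replicate the entire application, including the cleaning and analytics code that was not under pressure.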

Third, technological freedom. Each microservice can use the technology stack best suited to its task: a real-time stream processing service can use a framework built for streaming, while batch historical analysis can use a different set of tools. Teams are not locked into a single technology and can pick the sharpest "knife" for each specific problem.

Finally, iteration speed. Teams can develop, test, and deploy their own microservices independently, without coordinating a release of the whole system. Want to improve the data analysis? Update the analytics service and ship it quickly, without worrying about breaking the data collection module.

The concept is appealing, but for this fleet to sail efficiently and in coordination, it needs reliable foundational components. Think of a sophisticated automated assembly line: if the conveyor belt motor responds slowly and lacks precision, the efficiency and stability of the whole line suffer.

In the world of microservices, these "motors" and "gears" are the underlying infrastructure and the inter-service communication mechanisms. They demand high reliability and precise control: service discovery must be timely and accurate, configuration must reach every instance quickly and consistently, and state must be managed correctly across distributed components. Details like these often decide whether the architecture succeeds or fails.
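Service discovery is one of the "gears" mentioned above. The core idea can be sketched as a registry. Instances send periodic heartbeats, and lookups return only the instances whose last heartbeat falls within a time-to-live (TTL). The class below is a hypothetical in-memory illustration, not a production component. Real systems delegate this job to dedicated tooling such as Consul, etcd, or Kubernetes Services. The clock is injectable so that the example is deterministic.

```python
import time
from typing import Callable, Dict, List

class ServiceRegistry:
    """Hypothetical in-memory registry with heartbeat-based expiry."""

    def __init__(self, ttl_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the example is deterministic
        self._last_seen: Dict[str, Dict[str, float]] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance, or refresh it if already known."""
        self._last_seen.setdefault(service, {})[address] = self.clock()

    def lookup(self, service: str) -> List[str]:
        """Return addresses whose last heartbeat is within the TTL."""
        now = self.clock()
        alive = {addr: seen
                 for addr, seen in self._last_seen.get(service, {}).items()
                 if now - seen <= self.ttl}
        self._last_seen[service] = alive  # prune expired instances
        return sorted(alive)


# Usage with a fake clock: one instance stops sending heartbeats.
fake_now = [0.0]
registry = ServiceRegistry(ttl_seconds=10.0, clock=lambda: fake_now[0])
registry.heartbeat("ingest", "10.0.0.1:8080")
registry.heartbeat("ingest", "10.0.0.2:8080")
fake_now[0] = 8.0
registry.heartbeat("ingest", "10.0.0.2:8080")  # only this instance is still alive
fake_now[0] = 15.0
print(registry.lookup("ingest"))  # ['10.0.0.2:8080']
```

The TTL is the precision knob. If it is too long, callers get routed to dead instances. If it is too short, healthy instances drop out whenever a heartbeat is delayed.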

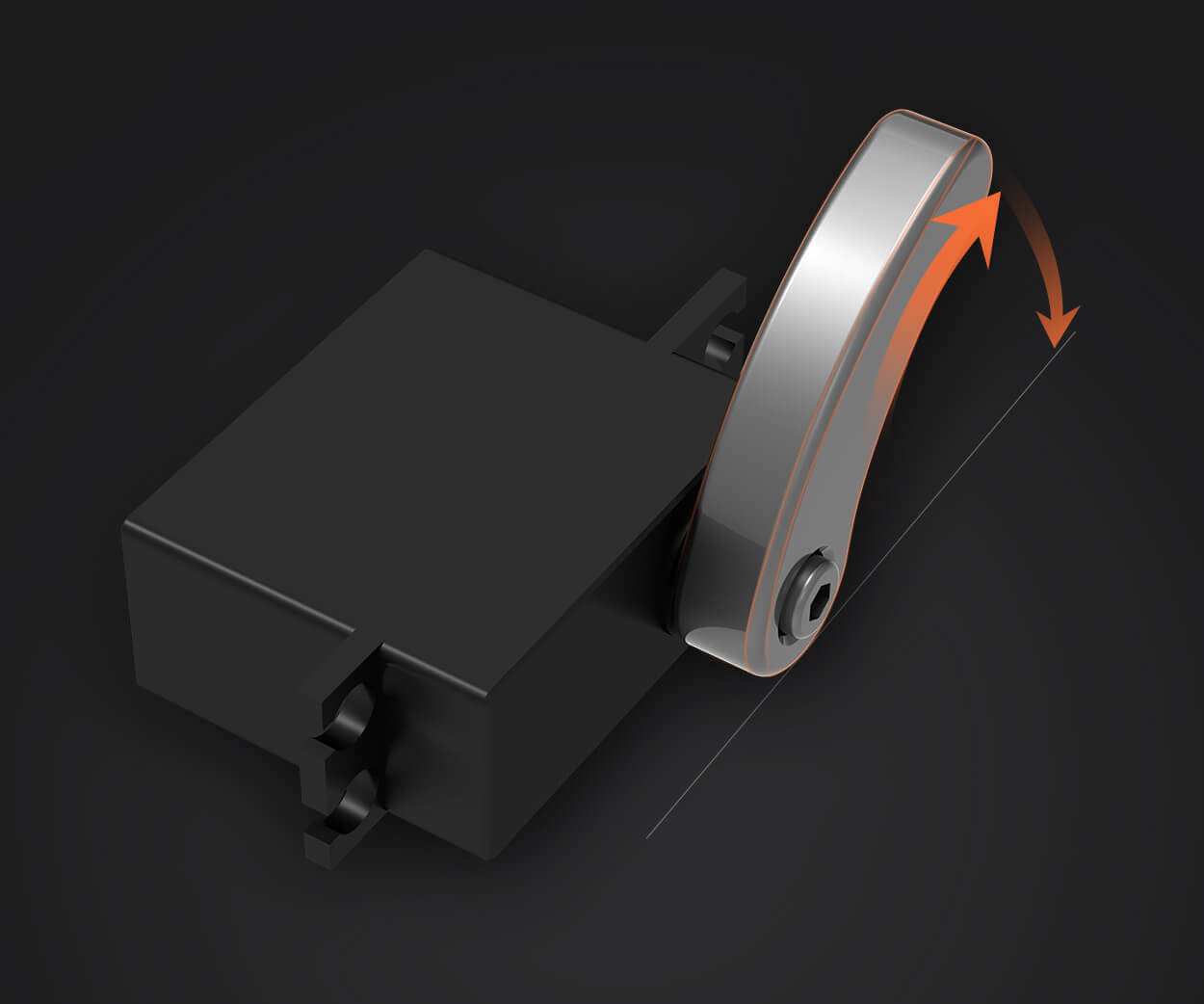

In this regard, the core spirit of Kpower's focus on precision motion and control aligns with the demands of microservice architecture. Whether it is a servo motor that needs millisecond response and precise positioning, or a steering gear that must execute commands stably and quickly within a limited angle, the emphasis is on precision, reliability, and efficient coordination. This philosophy of demanding the utmost from the underlying execution units is exactly the attitude to adopt when building a robust microservice data system: when each basic component performs its role precisely, the whole system runs smoothly.

How do you get started? You don't need to rebuild everything at once.

Start with a pain point. Identify the parts of your current data process that are the most unstable, change most often, or have the most obvious performance bottlenecks. Extract one of them and design it as your first microservice. For example, isolate the most time-consuming data conversion task first.

Define a clear "contract". Keep the interface (API) between the new service and the rest of the system simple and explicit. Just as a workshop needs input standards for raw materials and output specifications for finished products, this contract is the foundation of the collaboration between services.
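A contract can be as small as two typed records and a validation step at the service boundary. The sketch below assumes that a data conversion task has been extracted into its own service. The Celsius-to-Fahrenheit conversion, the field names `record_id` and `celsius`, and the function names are hypothetical stand-ins chosen for illustration. The input type plays the role of the raw-material standard, and the output type plays the role of the finished-product specification.

```python
from dataclasses import dataclass

# Hypothetical contract for a data conversion service extracted from a
# monolith: the field names and the Celsius-to-Fahrenheit task are
# illustrative assumptions.

REQUIRED_FIELDS = {"record_id", "celsius"}

@dataclass(frozen=True)
class ConversionRequest:
    """Input specification ("raw materials")."""
    record_id: str
    celsius: float

@dataclass(frozen=True)
class ConversionResult:
    """Output specification ("finished product")."""
    record_id: str
    fahrenheit: float

def parse_request(payload: dict) -> ConversionRequest:
    """Validate an incoming payload against the contract."""
    missing = REQUIRED_FIELDS - set(payload)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return ConversionRequest(record_id=str(payload["record_id"]),
                             celsius=float(payload["celsius"]))

def convert(request: ConversionRequest) -> ConversionResult:
    """The service's actual work, which sees only validated input."""
    return ConversionResult(record_id=request.record_id,
                            fahrenheit=request.celsius * 9 / 5 + 32)

result = convert(parse_request({"record_id": "r-1", "celsius": 100}))
print(result)  # ConversionResult(record_id='r-1', fahrenheit=212.0)
```

Rejecting bad input at the boundary keeps malformed data from leaking into the service's internals. As long as the two record types stay stable, both sides can change their implementations freely.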

Build autonomy. Let the service own its data (where applicable) and deploy independently. It should be responsible for its own lifecycle.

Embrace automation. As the number of microservices grows, managing them by hand becomes a nightmare. Plan from the start for toolchains that automate deployment, monitoring, and log collection.

The process can feel like untangling a knotted thread, and it takes patience in the early stages. But when the first service runs independently and delivers visible improvements, you will feel the satisfaction of that first knot coming loose. Data will flow in a more orderly way, the shape of the system will become clearer, and you will gain the confidence to handle future change.

Ultimately, the choice of technical architecture should always serve the business and the data itself. When data starts to "speak" and drive decisions, a system built from precise, independent microservices can make those "voices" clearer and more timely. It is no longer a piece of porcelain that needs careful handling, but a dynamic organism that keeps evolving.

This may be another elegant way to deal with data complexity.

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Leveraging innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients globally, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.

Update Time: 2026-01-19

Contact Kpower's product specialists for a recommendation on a suitable motor or gearbox for your product.