Published 2026-01-19

Imagine the microservice system you built as a sophisticated mechanical device, with each service module meshing and turning like a gear. Everything ran smoothly at first, but as business traffic rose and fell and the call chains between services grew longer, one link suddenly got "stuck" - like a spring that loses its elasticity after repeated compression. Overall response slowed down, and the system even crashed occasionally. At that point, do you get the feeling that the services are still alive but already a bit "stiff"?

Yes, this is a common problem in microservice architecture: a lack of resilience. The system looks distributed, but once it runs into network fluctuations, slow dependencies, or sudden traffic, the whole call chain easily becomes fragile. What to do? Many people start adding retry mechanisms and circuit breaker logic directly in the code. The result is often like forcing a patch onto a machine: fix one spot, and another starts making noise.

In fact, embedding "elastic components" in the architecture up front is better than remedying problems afterwards. It is like adding springs or buffer pads to key connections when designing a mechanical structure - the goal is not to replace parts when they break, but to let the structure itself absorb vibrations and adapt to fluctuations.

The idea of building resilience in Spring Boot microservices is similar. It is not a bunch of if-else retry code, but a systematic adaptive capability: allowing services to maintain functionality under stress, recover quickly from failures, and remain reliable in uncertain environments.

How exactly is this “elasticity” built? Many people immediately think of circuit breaking, rate limiting, and degradation. These terms sound very technical, but the core logic is intuitive: give the system a kind of "contingency intelligence". For example, when a downstream service responds slowly, can the system temporarily bypass it and return cached data or default values first? When traffic suddenly surges, can requests be throttled smoothly to avoid a cascading failure (an "avalanche")?
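The "bypass a slow downstream and return cached or default data" idea can be sketched in plain Java. This is a minimal illustration, not the API of any particular library: `TimeoutFallback`, `callWithFallback`, and `slowPriceLookup` are hypothetical names, and the 100 ms timeout is an arbitrary assumption.

```java
import java.time.Duration;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical helper: bound a call by a timeout and degrade to a fallback value. */
final class TimeoutFallback {
    private TimeoutFallback() {}

    static <T> T callWithFallback(Supplier<T> call, Duration timeout, T fallback) {
        return CompletableFuture.supplyAsync(call)
                // If the call has not finished in time, complete with the fallback instead.
                .completeOnTimeout(fallback, timeout.toMillis(), TimeUnit.MILLISECONDS)
                // If the call threw an exception, also degrade to the fallback.
                .exceptionally(ex -> fallback)
                .join();
    }
}

class TimeoutFallbackDemo {
    public static void main(String[] args) {
        // The simulated downstream takes 1 s, but we only wait 100 ms.
        String price = TimeoutFallback.callWithFallback(
                TimeoutFallbackDemo::slowPriceLookup, Duration.ofMillis(100), "cached-price");
        System.out.println(price);
    }

    /** Stand-in for a slow downstream service (assumption for the demo). */
    static String slowPriceLookup() {
        try {
            Thread.sleep(1000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "live-price";
    }
}
```

Note that in this sketch the slow call keeps running in the background after the fallback is returned; a production setup would usually also cancel or bound the underlying request.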

Traditional approaches often require introducing multiple external libraries and configuring complex policy rules, so managing them feels like maintaining another system. Is there a simpler way? In a mechanical device, the material and craftsmanship of a well-chosen spring determine its buffering effect. At the code level, you can likewise use lightweight resilience modules that encapsulate the elastic logic into simple, configurable components.

For example, you can embed a resilience policy in the client that makes calls between services: when three consecutive calls time out, it automatically switches to degradation logic; when the error rate exceeds a threshold, requests are temporarily stopped and probed again after a period of time. These behaviors do not require hand-written state checks. Instead, you configure the trigger conditions and response actions, much like setting mechanical parameters.
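To make the policy above concrete, here is a deliberately simplified circuit breaker sketch. It is an assumption-laden illustration rather than any product's implementation: `SimpleCircuitBreaker` is a hypothetical class, it trips on consecutive failures only (a real error-rate threshold would need a sliding window), and it serializes calls with `synchronized` for brevity.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Hypothetical, simplified breaker: opens after N consecutive failures, retries after a cooldown. */
final class SimpleCircuitBreaker {
    private final int failureThreshold;   // e.g. 3 consecutive failures
    private final long openMillis;        // how long to stop calling the downstream
    private final LongSupplier clock;     // injectable time source, e.g. System::currentTimeMillis
    private int consecutiveFailures = 0;
    private boolean open = false;
    private long openedAt = 0;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** True while the breaker is open and the cooldown has not yet elapsed. */
    synchronized boolean isOpen() {
        return open && clock.getAsLong() - openedAt < openMillis;
    }

    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (isOpen()) {
            return fallback.get();            // skip the downstream entirely
        }
        try {
            T result = action.get();          // normal call, or the trial call after cooldown
            consecutiveFailures = 0;
            open = false;                     // success closes the breaker
            return result;
        } catch (RuntimeException ex) {
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                open = true;                  // (re)open and restart the cooldown
                openedAt = clock.getAsLong();
            }
            return fallback.get();            // degrade instead of propagating the error
        }
    }
}
```

A caller might create it as `new SimpleCircuitBreaker(3, 10_000, System::currentTimeMillis)` and wrap each remote call in `call(remoteCall, cachedValue)`. After the cooldown, the next call acts as the probe: if it succeeds the breaker closes, and if it fails the breaker opens again immediately.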

What are the benefits of this approach? The system becomes more "alive". It no longer rigidly executes every call, but fine-tunes its behavior based on feedback from its environment. Even if external conditions are unstable, core business flows stay available. It is like adding a layer of "intelligent buffering" to each service module: pressure is absorbed, faults are avoided, and the whole process is almost imperceptible.

There are many tools on the market that provide resilience components, but many are too complex or require you to change your coding habits. Kpower's idea is different - it is more like providing a set of "resilience accessories" for your existing Spring Boot application, embedded directly into the existing call chain with almost no change to your architectural style.

You can think of it this way: if your microservice is a sophisticated servo control system, then Kpower's components are just the small dampers and elastic couplings. They do not replace the core motor, nor do they rewrite the control logic. They simply sit quietly in the transmission chain to reduce impact, compensate for deviations, and improve the stability of the overall movement.

What's it like to actually use it? Configuration is simple, and in most cases only a few lines of configuration are needed. Code intrusion is minimal, with no need to rewrite business logic. The effect is visible: monitoring metrics clearly show how many times circuit breaking or degradation was triggered and how quickly the service recovered. This makes maintenance intuitive - instead of debugging a pile of random, hard-to-reproduce failures, you watch how the system automatically responds to fluctuations.
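The metrics mentioned above (trigger counts and recovery speed) can be pictured with a small in-memory recorder. This is only a sketch under assumptions: `ResilienceMetrics` and its method names are hypothetical, and in a real Spring Boot application such numbers would more typically be published through a metrics library such as Micrometer, which is omitted here.

```java
import java.util.concurrent.atomic.LongAdder;

/** Hypothetical in-memory recorder for resilience events. */
final class ResilienceMetrics {
    private final LongAdder breakerOpened = new LongAdder();
    private final LongAdder fallbacksServed = new LongAdder();
    private final LongAdder recoveries = new LongAdder();
    private final LongAdder totalRecoveryMillis = new LongAdder();

    /** Call when a circuit breaker trips open. */
    void recordBreakerOpened() { breakerOpened.increment(); }

    /** Call whenever a degraded (fallback) response is served. */
    void recordFallback() { fallbacksServed.increment(); }

    /** Call when a breaker closes again; millisOpen is how long it stayed open. */
    void recordRecovery(long millisOpen) {
        recoveries.increment();
        totalRecoveryMillis.add(millisOpen);
    }

    long breakerOpenedCount() { return breakerOpened.sum(); }

    long fallbackCount() { return fallbacksServed.sum(); }

    /** Average time from open to recovery, or 0 if nothing has recovered yet. */
    double averageRecoveryMillis() {
        long n = recoveries.sum();
        return n == 0 ? 0.0 : (double) totalRecoveryMillis.sum() / n;
    }
}
```

`LongAdder` is used here because counters like these are written from many request threads and read only occasionally.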

Q: Will adding these elastic components increase system complexity?

Just as in mechanical design, adding a buffer stage means one more part, but if it is designed properly it actually reduces overall wear. The management logic of resilience modules is centralized: they usually have a unified configuration entry point and monitoring interface rather than being scattered throughout the code. Once set up, they work automatically and require no daily intervention.

Q: If I don't want to use a circuit breaker and only want degradation, can I choose flexibly?

Certainly. Resilience design is not one-size-fits-all; it is more like a set of different tools in a toolbox. You can choose to enable only timeout control, or only a degradation policy, based on the importance of the service and how strongly it depends on others. You can even configure different rules for different interfaces to finely control the resilience of each link.
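The per-interface selection described above could be modeled roughly as follows. This is a sketch with hypothetical names (`ResiliencePolicy`, `PolicyRegistry`, and the endpoint paths are all invented for illustration); it only shows how timeout, degradation, and circuit breaking can be switched on independently per endpoint, not how any specific product stores its configuration.

```java
import java.time.Duration;
import java.util.Map;
import java.util.Optional;

/** Hypothetical policy: each resilience feature can be enabled independently. */
final class ResiliencePolicy {
    final Optional<Duration> timeout;      // empty means no timeout control
    final boolean fallbackEnabled;         // degradation on failure
    final boolean circuitBreakerEnabled;

    private ResiliencePolicy(Optional<Duration> timeout, boolean fallbackEnabled,
                             boolean circuitBreakerEnabled) {
        this.timeout = timeout;
        this.fallbackEnabled = fallbackEnabled;
        this.circuitBreakerEnabled = circuitBreakerEnabled;
    }

    static ResiliencePolicy timeoutOnly(Duration timeout) {
        return new ResiliencePolicy(Optional.of(timeout), false, false);
    }

    static ResiliencePolicy fallbackOnly() {
        return new ResiliencePolicy(Optional.empty(), true, false);
    }

    static ResiliencePolicy full(Duration timeout) {
        return new ResiliencePolicy(Optional.of(timeout), true, true);
    }
}

/** Hypothetical registry: one policy per endpoint, with a default for everything else. */
final class PolicyRegistry {
    private final Map<String, ResiliencePolicy> byEndpoint;
    private final ResiliencePolicy defaultPolicy;

    PolicyRegistry(Map<String, ResiliencePolicy> byEndpoint, ResiliencePolicy defaultPolicy) {
        this.byEndpoint = Map.copyOf(byEndpoint);   // defensive, immutable copy
        this.defaultPolicy = defaultPolicy;
    }

    ResiliencePolicy policyFor(String endpoint) {
        return byEndpoint.getOrDefault(endpoint, defaultPolicy);
    }
}
```

For instance, a critical `/orders` endpoint might be registered with `ResiliencePolicy.full(Duration.ofSeconds(2))`, a nice-to-have `/recommendations` endpoint with `ResiliencePolicy.fallbackOnly()`, and everything else left on a timeout-only default.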

In fact, choosing a technical solution often comes down to how it feels in practice. When you look at the monitoring chart and your core metrics stay as smooth as a straight line even though a downstream service occasionally jitters; when you see the system automatically scale resources down during low night-time traffic and warm up ahead of the daytime peak - it feels like knowing there is enough safety margin in a mechanical device, so it will run steadily even when it hits bumps.

Building microservice resilience is not only about coping with disasters, but about making everyday operation smoother. It moves the system from "barely running" to "easily adapting". All of this can start from one lightweight, focused component, and resilience can then gradually seep into the bloodstream of the architecture.

Good elastic design makes technology invisible and allows business to continue. This may be why more and more people are beginning to think of resilience not as a "failure recovery solution" but as the default configuration of microservices architecture. After all, who doesn’t want their system to be flexible and stable at the same time?

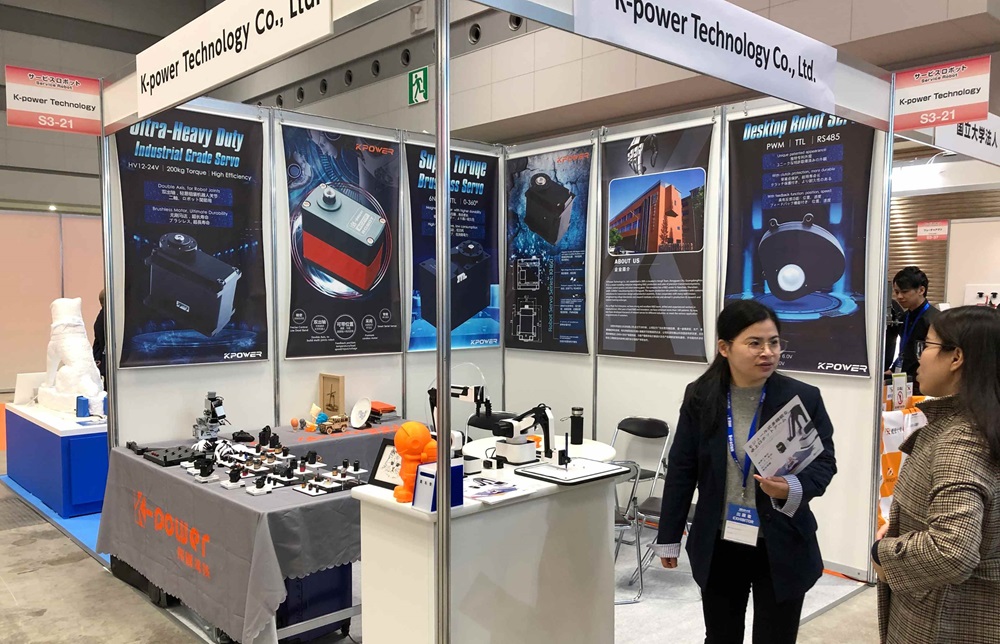

Established in 2005 and headquartered in Dongguan, Guangdong Province, China, Kpower is a professional manufacturer of compact motion units. Leveraging innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients globally, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.

Update Time: 2026-01-19

Contact a Kpower product specialist for a recommendation on a suitable motor or gearbox for your product.