Published 2026-01-19

Imagine you have designed a sophisticated microservice system, and every module runs well on its own. Then your user count suddenly grows tenfold, and the whole system behaves like a one-lane highway at rush hour: no single service is broken, yet requests queue up endlessly and responses are so slow you want to throw your keyboard.

The usual culprit is scalability that has not kept pace. The problem is often not the code itself but the structure. The services may be split very finely while the call chains between them are long and tangled; the database may have become the single bottleneck; or one core service may buckle under load. In those cases, simply adding servers is like adding more cars to a traffic jam: it only makes the congestion worse.
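
To see why a long call chain hurts, consider a request that passes through several services in sequence. Assuming each hop adds its own latency and fails independently of the others, latencies add up while availabilities multiply. The function names and numbers below are hypothetical, chosen only to illustrate the arithmetic.

```python
from math import prod


def chain_latency_ms(hop_latencies_ms):
    """End-to-end latency of a purely sequential call chain: the hops add up."""
    return sum(hop_latencies_ms)


def chain_availability(hop_availabilities):
    """Probability that every hop succeeds, assuming independent failures."""
    return prod(hop_availabilities)


# Hypothetical chain: five services, each ~20 ms and 99.9% available.
hops = 5
latency = chain_latency_ms([20.0] * hops)
availability = chain_availability([0.999] * hops)
print(f"{hops} hops: {latency:.0f} ms, availability {availability:.4%}")
```

With these assumed figures, five hops already cost 100 ms and pull availability down to roughly 99.5%, before any queueing under load is taken into account.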

So how do you widen the road?

In fact, the approach can be quite "physical", like assembling a precision mechanical device: only when the gears mesh and the transmission runs smoothly does the whole system operate efficiently. The key to microservice scalability is to make data flow and business flow behave like a well-designed transmission system, independent yet coordinated.

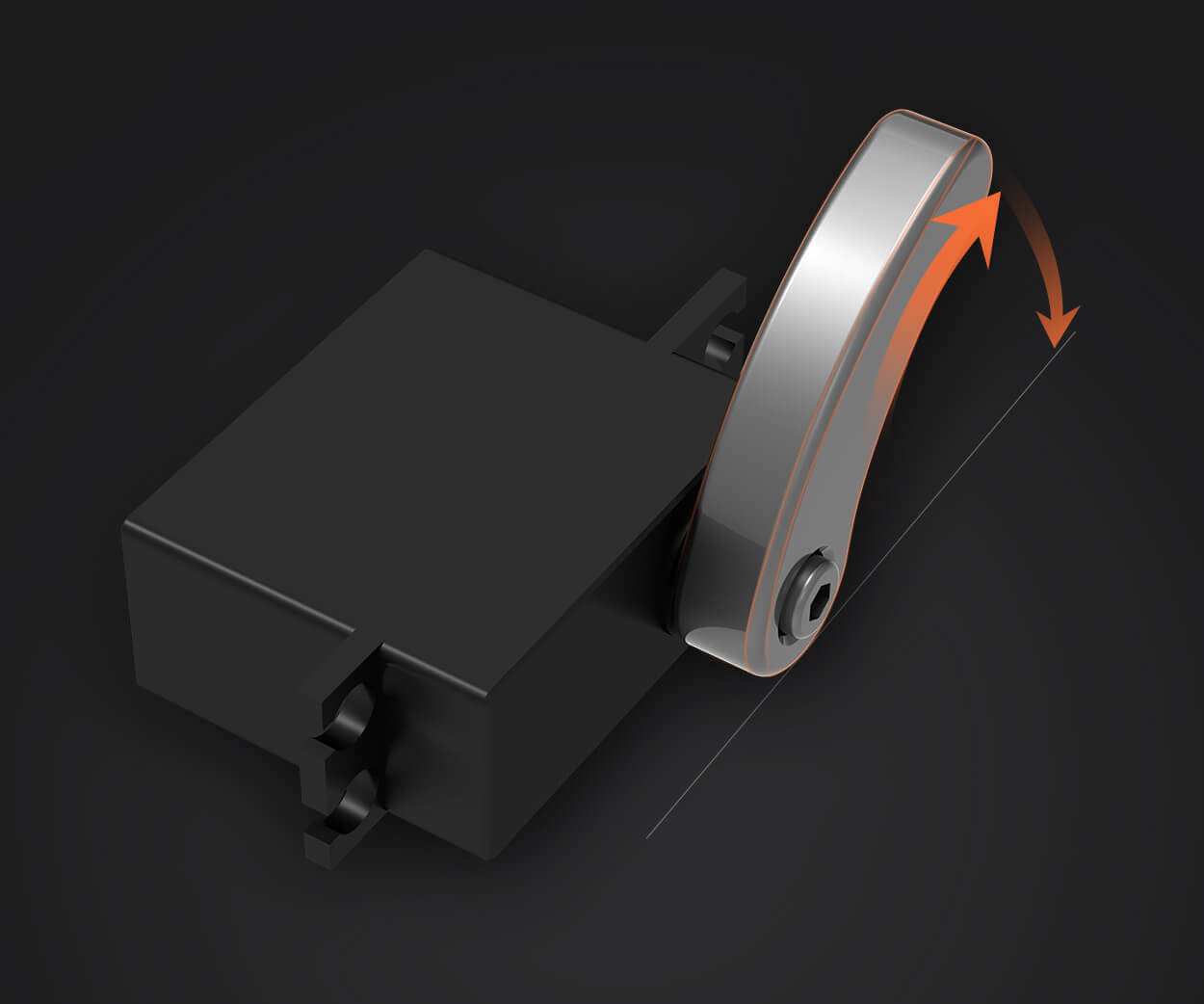

Many people's first reaction is horizontal scaling: add instances! That certainly works, but it is costly and sometimes treats the symptoms rather than the root cause. It is like driving a robotic arm with a servo: if the transmission itself is loose or has backlash, then no matter how expensive the motor is, the motion will still be imprecise and unstable.
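
A rough capacity model makes the point concrete. It assumes every request hits one shared database, so the system can never run faster than that database no matter how many application instances are added. The function name and all numbers are hypothetical.

```python
def system_throughput(instances, per_instance_rps, db_capacity_rps):
    """Requests per second the whole system can sustain.

    Assumption: every request goes through one shared database, so total
    throughput is capped by whichever is smaller, the app tier or the DB.
    """
    app_capacity = instances * per_instance_rps
    return min(app_capacity, db_capacity_rps)


# Hypothetical numbers: 500 rps per instance, database tops out at 3000 rps.
for n in (2, 4, 6, 8, 16):
    print(n, "instances ->", system_throughput(n, 500, 3000), "rps")
```

Under these assumptions throughput stops growing at six instances; everything beyond that adds cost but no capacity, which is why the fix has to target the shared bottleneck itself.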

Real scalability design starts with a few "transmission joints".

The theory sounds rich, but reality is lean. Let's get practical.

Q: How fine-grained should the services be? A: Don't go "micro" just for the sake of it. It is enough that each service can be developed, deployed, and scaled independently and maps to a clear business-capability boundary. Split too finely, and operational and network overhead eat into performance; split too coarsely, and you lose the point of elastic scaling. Think of a set of mechanical modules: clean interfaces that make the modules easy to take apart and reassemble are the mark of good design.

Q: How do you choose a technology stack? A: There is no silver bullet; the best stack is the one that fits your team and your business scenario. One thing is key, though: keep it lightweight and consistent. In particular, unify communication protocols and data formats as far as possible to reduce conversion costs. Imagine a plant where some equipment runs on hydraulics, some on pneumatics, and some on belt drives: maintenance would be a nightmare.
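
One way to keep data formats consistent is a single shared message envelope that every service serializes the same way. This is a minimal sketch: the `Envelope` class, its field names (`event_type`, `payload`, `schema_version`, `trace_id`), and the choice of JSON as the wire format are all hypothetical assumptions, not a prescribed standard.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass(frozen=True)
class Envelope:
    """Hypothetical shared envelope: every service sends and accepts this shape."""
    event_type: str
    payload: Dict[str, Any]
    schema_version: int = 1
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def to_json(self) -> str:
        # One serialization rule for everyone: sorted keys, non-ASCII kept as-is.
        return json.dumps(asdict(self), sort_keys=True, ensure_ascii=False)

    @classmethod
    def from_json(cls, raw: str) -> "Envelope":
        data = json.loads(raw)
        # Reject versions this service does not understand instead of guessing.
        if data.get("schema_version", 1) != 1:
            raise ValueError(f"unsupported schema_version: {data['schema_version']}")
        return cls(**data)


# Usage: a hypothetical "order.created" event round-trips unchanged.
msg = Envelope("order.created", {"order_id": 42, "amount": 19.9})
wire = msg.to_json()
print(wire)
```

The explicit `schema_version` check is one design choice among several; it makes an incompatible producer fail loudly at the boundary rather than corrupting data downstream.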

Q: How do monitoring and operations keep up? A: Scalability is not only a runtime concern. Without a clear monitoring view, you have no idea where the bottleneck is. You need to be able to trace how many services a request passes through and how much time it spends in each hop. Logs, metrics, and tracing: none of them can be missing. It is like fitting a complex machine with multiple sensors, so that abnormal vibration, temperature, or pressure is detected immediately.
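
The idea behind tracing can be sketched in a few lines: every hop records a span tagged with a shared trace ID and its own duration, so you can see how many services a request crossed and where the time went. This is a toy in-process recorder; the `span` and `summarize` helpers and the service names are hypothetical. Production systems usually rely on a tracing library such as OpenTelemetry and pass the trace ID between services in request headers.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Span:
    trace_id: str
    service: str
    duration_ms: float


SPANS: List[Span] = []  # toy in-memory sink; a real system exports spans elsewhere


@contextmanager
def span(trace_id: str, service: str):
    """Time one hop of a request and record it under the shared trace ID."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        SPANS.append(Span(trace_id, service, elapsed_ms))


def summarize(trace_id: str) -> Dict[str, float]:
    """Total time recorded per service for one request."""
    totals: Dict[str, float] = {}
    for s in SPANS:
        if s.trace_id == trace_id:
            totals[s.service] = totals.get(s.service, 0.0) + s.duration_ms
    return totals


# Simulated request: a gateway calling two hypothetical downstream services.
# Durations are inclusive, so the gateway span contains its children's time.
trace = uuid.uuid4().hex
with span(trace, "gateway"):
    with span(trace, "orders"):
        time.sleep(0.01)
    with span(trace, "inventory"):
        time.sleep(0.02)

report = summarize(trace)
print(f"{len(report)} services touched")
for service, ms in sorted(report.items(), key=lambda kv: -kv[1]):
    print(f"{service:>10}: {ms:6.1f} ms")
```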

Building scalable microservices is not a "set it and forget it" project. It is more like tuning a sophisticated mechanical system: it requires continuous observation, adjustment, and sometimes even rebuilding.

It might start as a simple split. As the business grows more complex, you will find that some services call each other so frequently that they should be merged; some data flows have become hot spots that need a cache; some external dependencies are unreliable and need a circuit breaker... It is an iterative process.
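
Two of the fixes mentioned above, a cache for a hot data flow and a circuit breaker for an unreliable dependency, can be sketched as follows. This is a minimal single-threaded illustration, not a production library: `TTLCache`, `CircuitBreaker`, `fetch_price`, and every threshold are hypothetical. The breaker follows the common closed, open, half-open pattern: after `max_failures` consecutive errors it fails fast, and once `reset_after` seconds pass it lets one trial call through.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Serve a hot key from memory for `ttl` seconds before reloading it."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._data: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]                # fresh hit: skip the slow backend
        value = loader()                   # miss or expired: go to the backend
        self._data[key] = (now, value)
        return value


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a dependency the breaker considers unhealthy."""


class CircuitBreaker:
    """Fail fast after `max_failures` consecutive errors; after `reset_after`
    seconds, allow a trial call (closed -> open -> half-open)."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("dependency marked unhealthy; failing fast")
        # Circuit closed, or cool-down over (half-open): try the real call.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()   # open, or re-open after a failed trial
            raise
        self.failures = 0                       # any success closes the circuit
        self.opened_at = None
        return result


# Hypothetical wiring: cache a hot lookup and guard the flaky dependency behind it.
breaker = CircuitBreaker(max_failures=3, reset_after=30.0)
cache = TTLCache(ttl=5.0)


def fetch_price(sku: str) -> float:
    # Stand-in for a call to an unstable external pricing service.
    return 9.99


price = cache.get_or_load("sku-123", lambda: breaker.call(lambda: fetch_price("sku-123")))
```

Injecting the clock keeps both classes testable without real waiting; a multi-threaded service would additionally need locking around the shared state.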

Interestingly, once you break the scalability problem down into concrete "transmission efficiency" issues, the solution often becomes clear. Instead of blindly chasing "high concurrency", you start asking: Why does this service's response time fluctuate? Can the coupling between these two services be reduced? Where exactly does the storage I/O bottleneck occur?
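
Answering "why does the response time fluctuate?" starts with measuring it in a way that exposes fluctuation. An average hides occasional slow calls; tail percentiles reveal them. The sketch below uses the standard library's `statistics.quantiles`; the `latency_profile` helper and the sample data are hypothetical.

```python
from statistics import mean, quantiles


def latency_profile(samples_ms):
    """Summarize latency samples: the mean hides spikes, tail percentiles show them."""
    cuts = quantiles(samples_ms, n=100, method="inclusive")  # 99 cut points
    return {
        "mean": mean(samples_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }


# Hypothetical samples: 98 calls at 20 ms and 2 slow calls at 400 ms.
samples = [20.0] * 98 + [400.0] * 2
print(latency_profile(samples))
```

Here the mean (about 28 ms) looks harmless, while p99 sits at 400 ms: that gap is exactly the kind of fluctuation worth chasing.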

In the area of microservice scalability, what Kpower provides is not just tools or platforms but also a set of "engineering thinking" proven across extensive practice. We understand the pain points that come with that kind of architectural growth, because we have lived through the full cycle ourselves: from monolith to distributed system, and from merely running to scaling efficiently and stably.

Our approach emphasizes "smooth evolution". Rather than tearing everything down and starting over, we identify the key constraints in your existing architecture and inject elasticity where it is needed. It is like upgrading the control system and transmission components of an existing machine to raise its overall performance without replacing all of its core parts.

The benefits of this approach are concrete: higher resource utilization, a stronger ability to absorb traffic fluctuations, and a development team that can focus on business innovation instead of fighting performance problems all day long.

If you are also thinking about how to make your microservice architecture handle growth more gracefully, it may be time to change perspective and treat your "digital transmission system" the way you would design precision machinery. True scalability ultimately leads to an effortless elegance.

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Building on innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients worldwide, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.

Update Time: 2026-01-19

Contact a Kpower product specialist for a recommendation on a suitable motor or gearbox for your product.