Published 2026-01-19

Imagine that you are carefully debugging a complex robotic arm, with the servo at each joint responding to precise instructions. Suddenly, one joint responds half a beat late. The motor itself is fine; the signal processing has stalled somewhere along the chain. The whole movement becomes hesitant and uneven. In the digital world, a system made up of many microservices is a similarly intricate machine, and the cache is the key gear that smooths the signal and removes the lag.

You have probably heard plenty about how microservice architecture improves flexibility, but few people talk about the undercurrents that quietly emerge as the number of services grows. The database is overwhelmed by frequent queries, repeated calculations consume precious resources, and users see response times that swing between fast and slow. This is less like a piece of hardware failing outright and more like gradual performance wear and tear.

So the question is: how can we give these decentralized, independently operating services a fast, smooth "memory"?

In a microservice context, caching is far more than a simple storage layer in the middle. Think of it as each service's short-term working memory. When a service needs data such as user profiles, product catalogs, or real-time configuration, it doesn't have to make the trip to the database every time. If the data was fetched recently and is not expected to change for a while, the cache can return a copy immediately.
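A minimal sketch of this read path, commonly called the cache-aside pattern, assuming an in-process dictionary as the cache. The names `make_profile_reader` and `load_profile_from_db` are hypothetical; the loader stands in for whatever database query a real service would run.

```python
from typing import Callable, Dict


def make_profile_reader(load_profile_from_db: Callable[[str], dict]) -> Callable[[str], dict]:
    """Return a reader that checks the cache first and only queries the database on a miss."""
    cache: Dict[str, dict] = {}

    def get_user_profile(user_id: str) -> dict:
        if user_id in cache:                      # cache hit: no database round trip
            return cache[user_id]
        profile = load_profile_from_db(user_id)   # cache miss: fetch from the source
        cache[user_id] = profile                  # keep a copy for the next caller
        return profile

    return get_user_profile


if __name__ == "__main__":
    db_calls = []

    def load_profile_from_db(user_id: str) -> dict:
        # Hypothetical stand-in for a real database query; records each call.
        db_calls.append(user_id)
        return {"id": user_id, "name": f"user-{user_id}"}

    get_user_profile = make_profile_reader(load_profile_from_db)
    get_user_profile("42")
    get_user_profile("42")
    print(len(db_calls))  # 1: the second read is served from the cache
```

This version never expires anything, so on its own it would serve old data indefinitely; in practice it is paired with an expiration or invalidation strategy.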

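This brings several immediate benefits: fewer queries reach the database, expensive results are not recomputed on every request, and response times become faster and more predictable.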

However, introducing a cache is not simply a matter of adding a component. It is a bit like choosing the lubricant for a delicate servo system: the wrong type, or the wrong way of applying it, can cause clogging or contamination.

I know what you are thinking: "That sounds good, but how do I actually do it? Won't it make the system more complicated?" Let's step away from pure theory and look at a few key choices and design ideas.

First, what should you cache? Not all data is worth caching. Prefer data that is read frequently, changes rarely, and is expensive to compute or fetch. Suppose you run a real-time quotation system: the underlying product information and exchange rates may change only hourly, which makes them ideal caching candidates. User balances, by contrast, change with every transaction and are clearly a poor fit.
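The three selection criteria can be turned into a rough screening check. This is a hypothetical heuristic, not a standard formula: the `DataProfile` fields, the threshold defaults, and the traffic numbers in the usage lines are all illustrative assumptions you would replace with measurements from your own system.

```python
from dataclasses import dataclass


@dataclass
class DataProfile:
    name: str
    reads_per_hour: float
    writes_per_hour: float
    load_cost_ms: float  # time to fetch or compute one value from the source


def worth_caching(
    p: DataProfile,
    min_reads_per_hour: float = 100.0,    # illustrative threshold
    min_read_write_ratio: float = 10.0,   # illustrative threshold
    min_cost_ms: float = 5.0,             # illustrative threshold
) -> bool:
    """Hypothetical check: frequently read, rarely changed, and not trivially cheap to load."""
    frequently_read = p.reads_per_hour >= min_reads_per_hour
    rarely_changed = (
        p.writes_per_hour == 0
        or p.reads_per_hour / p.writes_per_hour >= min_read_write_ratio
    )
    costly_to_load = p.load_cost_ms >= min_cost_ms
    return frequently_read and rarely_changed and costly_to_load


if __name__ == "__main__":
    # Made-up traffic figures, only to mirror the examples in the text.
    exchange_rate = DataProfile("exchange_rate", reads_per_hour=50_000, writes_per_hour=1, load_cost_ms=30)
    user_balance = DataProfile("user_balance", reads_per_hour=2_000, writes_per_hour=1_500, load_cost_ms=8)
    print(worth_caching(exchange_rate), worth_caching(user_balance))
```

Requiring all three conditions is a deliberately conservative simplification; some teams would cache cheap but extremely hot data too.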

Second, where should the cache live? It can sit inside each service (a local cache), which is fast but cannot be shared, since every instance holds its own copy. Or it can run as independent middleware (a distributed cache) that all services access, which keeps data more consistent across services but adds network overhead. It is like choosing between centralized lubrication and point-by-point lubrication for a machine: the right choice depends on how you weigh consistency against performance.
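The two placements are often combined, with a small local cache in front of a shared one. The sketch below assumes that layout; `SharedCache` is a hypothetical stand-in for a networked distributed cache and is just a dictionary here so the example runs on its own.

```python
from typing import Callable, Dict, Optional


class SharedCache:
    """Stand-in for a distributed cache reached over the network; a plain dict in this sketch."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class TwoTierReader:
    """Checks this instance's local dict first, then the shared cache, then the source."""

    def __init__(self, shared: SharedCache, load_from_source: Callable[[str], str]) -> None:
        self.local: Dict[str, str] = {}   # fast, but private to this service instance
        self.shared = shared              # slower (network hop), but shared by all instances
        self.load_from_source = load_from_source

    def get(self, key: str) -> str:
        if key in self.local:
            return self.local[key]
        value = self.shared.get(key)
        if value is None:
            value = self.load_from_source(key)  # slowest path: the database
            self.shared.set(key, value)
        self.local[key] = value
        return value


if __name__ == "__main__":
    shared = SharedCache()
    service_a = TwoTierReader(shared, lambda k: f"value-for-{k}")
    service_b = TwoTierReader(shared, lambda k: f"value-for-{k}")
    service_a.get("catalog")  # loads from the source and fills both tiers
    service_b.get("catalog")  # found in the shared tier, no source call
```

The second instance never touches the source because the first one already populated the shared tier. The local tier is the part that cannot be shared, so any update has to reach each instance's private copy separately.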

Third, how is cached data updated and invalidated? This is the core challenge. The most common strategy is to give each entry a time-to-live (TTL): once it expires, the next request reloads it from the source. A more refined approach is to actively evict or update the relevant cache entries whenever the source data changes. This takes more design work, but it keeps the information users see much fresher.
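Here is a minimal sketch of both strategies together, assuming a single in-process cache: entries expire after a fixed TTL, and a write path evicts the affected key as soon as the source changes. `TTLCache` and `update_price` are hypothetical names, and the `db` dictionary stands in for the real database.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire ttl_seconds after being stored; invalidate() removes one early."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data: Dict[str, Tuple[float, object]] = {}

    def get(self, key: str) -> Optional[object]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:  # expired: drop it and report a miss
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: object) -> None:
        self._data[key] = (self.clock() + self.ttl, value)

    def invalidate(self, key: str) -> None:
        self._data.pop(key, None)


def update_price(db: Dict[str, float], cache: TTLCache, product_id: str, price: float) -> None:
    """Write to the source of truth first, then evict the cached copy so the next read reloads it."""
    db[product_id] = price
    cache.invalidate(product_id)
```

Evicting on write, rather than overwriting the cached value, is a common and simple choice. It still leaves a small race window in which a concurrent reader can repopulate the old value, and the TTL is what eventually bounds how long such a stale entry can survive.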

"Won't this mean different services see inconsistent data?" Good question. In a distributed environment, insisting on strong consistency can come at the cost of availability. A pragmatic approach, depending on the business scenario, is to accept brief eventual consistency. For example, when a user updates their profile picture, it may take a few seconds to propagate to every node worldwide, which is usually acceptable.
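One way to see where that short inconsistency window comes from is a sketch in which each service instance keeps its own local cache and learns about updates through invalidation messages. Everything here is an in-memory assumption: `InvalidationBus` is a hypothetical stand-in for a real message broker, and the shared `db` dictionary stands in for the database.

```python
from collections import deque
from typing import Deque, Dict, List


class ServiceNode:
    """One service instance with its own local cache and an inbox of invalidation messages."""

    def __init__(self, name: str, db: Dict[str, str]) -> None:
        self.name = name
        self.db = db
        self.cache: Dict[str, str] = {}
        self.inbox: Deque[str] = deque()

    def read(self, key: str) -> str:
        if key not in self.cache:
            self.cache[key] = self.db[key]
        return self.cache[key]

    def process_invalidations(self) -> None:
        # Until this runs, reads on this node may still return the old value.
        while self.inbox:
            self.cache.pop(self.inbox.popleft(), None)


class InvalidationBus:
    """In-memory stand-in for a broker that fans out invalidation events to every node."""

    def __init__(self, nodes: List[ServiceNode]) -> None:
        self.nodes = nodes

    def publish(self, key: str) -> None:
        for node in self.nodes:
            node.inbox.append(key)


def update_avatar(db: Dict[str, str], bus: InvalidationBus, user_id: str, url: str) -> None:
    db[user_id] = url     # the source of truth changes immediately
    bus.publish(user_id)  # caches catch up only when each node processes its inbox
```

In a real deployment the gap between `publish` and each node's `process_invalidations` is network and queue latency, which is the few-second window the profile-picture example describes.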

In many of our projects involving servo control and automation integration, data flows between software and hardware like nerve signals. Introducing a caching strategy is never an isolated software decision: it affects the entire chain, from load balancing to failure recovery.

We prefer to think of caching as a design choice that improves the "economics" of a system: it spends some memory in exchange for valuable computing time and steadier response times. The trade-off is like choosing better-suited bearings for fast-moving mechanical parts: reducing hidden friction losses is what extends operating life and improves efficiency.

Implementing caching is not a "set it and forget it" step; it requires observation and tuning. At first you may cache too much or too little, or set expiration times too long or too short. By monitoring the cache hit rate and overall system latency, you will gradually find the sweet spot that fits your business rhythm.
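As an illustration of that tuning loop, here is a sketch of a size-bounded cache that counts its own hits and misses. It assumes a least-recently-used (LRU) eviction policy, which is one common choice rather than the only one. `InstrumentedLRUCache` and `replay` are hypothetical names, and the access pattern is made up to show how capacity changes the hit rate.

```python
from collections import OrderedDict
from typing import Hashable, List, Optional


class InstrumentedLRUCache:
    """Size-bounded LRU cache that counts hits and misses so the hit rate can be monitored."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, object]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Optional[object]:
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        return None

    def set(self, key: Hashable, value: object) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def replay(capacity: int, keys: List[str]) -> float:
    """Replay an access pattern, filling the cache on each miss, and return the hit rate."""
    cache = InstrumentedLRUCache(capacity)
    for key in keys:
        if cache.get(key) is None:
            cache.set(key, key.upper())  # stand-in for loading from the source
    return cache.hit_rate()


if __name__ == "__main__":
    pattern = ["a", "b", "c", "a", "b", "c"]
    print(replay(2, pattern))  # 0.0: too small, every entry is evicted before reuse
    print(replay(3, pattern))  # 0.5: the working set fits, so repeats become hits
```

The jump from 0.0 to 0.5 with a single extra slot shows why hit rate is worth tracking: a cache slightly smaller than the working set can cost memory while saving almost nothing.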

Ultimately, when caching is properly integrated into your microservice architecture, it feels like aligning all the gears of a complex machine. It doesn't eliminate friction, but it makes friction controllable and predictable, so the whole system runs more smoothly and lasts longer. Scaling services becomes easier because the database is no longer the link most likely to collapse, and the user experience becomes smoother because waiting is no longer the norm.

This is perhaps the ideal state to pursue in technical design: hide complexity behind the scenes and leave simplicity and reliability for end users. When every service call gets a prompt response, the whole system settles into a natural rhythm, like breathing.

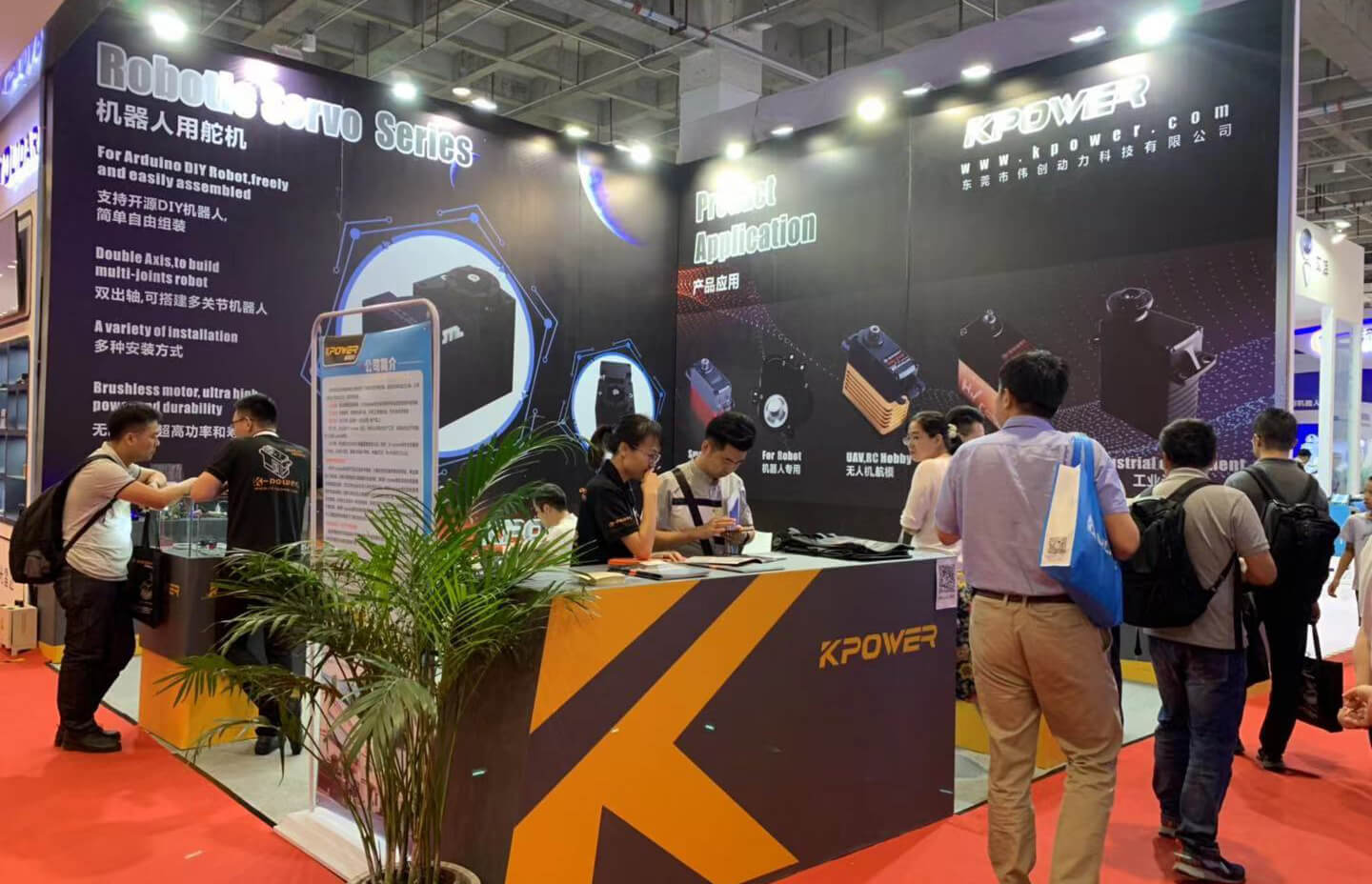

Established in 2005, Kpower is a professional manufacturer of compact motion units, headquartered in Dongguan, Guangdong Province, China. Leveraging innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients worldwide, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.

Update Time: 2026-01-19

Contact a Kpower product specialist for a recommendation on a suitable motor or gearbox for your product.