Published 2026-01-19

You’ve built this sleek system with Spring Boot microservices. Everything’s modular, independent, scalable. It feels clean. Then, reality hits. Maybe it’s a product catalog that gets hammered every morning, or a user profile service that’s constantly being pinged. Responses begin to slow. You see the latency numbers creep up, and the database starts grumbling under the load. That smooth, fast experience you designed? It’s getting clogged. It’s not a breakdown, just a gradual, frustrating drag.

That’s the silent problem many face. The very architecture that brings freedom can introduce friction. Each service talking to the database, over and over, for the same old data. It’s like having a brilliant team where everyone runs to the same file cabinet for every single question, instead of just remembering the answer.

So, what do you do? You introduce a good memory. You cache.

Caching isn’t about fancy tech. It’s a simple principle: keep a copy of frequently needed data closer to where it’s used. For a Spring Boot microservice, it means that instead of always going to the main database, the service can first check its own quick-access storage—its cache. If the data is there, it’s a lightning-fast response. If not, it fetches from the database, stores a copy, and then serves it.
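That check-then-fetch flow can be sketched in a few lines of plain Java. This is a minimal illustration, not a production cache: the class name LookupCache and the loader function (standing in for the database query) are assumptions made for the example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical helper: checks the cache first and falls back to a loader on a miss.
class LookupCache<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // stands in for the database query

    LookupCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        // On a hit, computeIfAbsent returns the stored value without calling the loader.
        // On a miss, it calls the loader once, stores the result, and returns it.
        return entries.computeIfAbsent(key, loader);
    }
}
```

Calling get("XYZ") twice would run the loader only on the first call; the second call is answered from memory.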

Think of it like a coffee shop barista. On a busy morning, they don’t grind beans for every single order. They brew a batch and keep it ready. When someone asks for a black coffee, it’s poured in seconds. Only for a special order, like a caramel oat milk latte, do they start from scratch. Caching works the same way. Handle the common stuff instantly, save the heavy lifting for the unique requests.

But here’s where it gets interesting in a microservices world. It’s not just one barista; it’s a whole team. How do you make sure they all have the right information? If one service updates a user’s email, how do the others know their cached copy is now stale? This is the real puzzle.

Okay, so caching sounds good. But how do you actually weave it into your services without creating a tangled mess?

First, you choose what to remember. Not everything deserves a spot in the cache. Look for data that’s read often but changes rarely. Product details, country lists, static configuration—these are classic candidates. Data that’s unique every time, or super-sensitive, might stay out.

Then, you pick your tool. Spring Boot makes the first steps almost casual. Once caching is switched on with @EnableCaching, an annotation like @Cacheable tells a method to store its results. The next time the method is called with the same arguments, Spring returns the cached value without running the method body. It feels like magic, but it’s just clever design.
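As a rough sketch of what that looks like, assuming the spring-boot-starter-cache dependency is on the classpath: the class names CacheConfig and ProductService, the cache name "products", and the loadFromDatabase helper are all hypothetical and only there for illustration.

```java
import org.springframework.cache.annotation.Cacheable;
import org.springframework.cache.annotation.EnableCaching;
import org.springframework.context.annotation.Configuration;
import org.springframework.stereotype.Service;

@Configuration
@EnableCaching // switches on Spring's annotation-driven caching
class CacheConfig {
}

@Service
class ProductService {

    // "products" is a hypothetical cache name. With a single argument,
    // that argument is used as the cache key by default.
    @Cacheable("products")
    public String findProductName(String productId) {
        // Runs only on a cache miss; stands in for a repository or database call.
        return loadFromDatabase(productId);
    }

    private String loadFromDatabase(String productId) {
        return "Product " + productId; // placeholder for a real query
    }
}
```

One thing to keep in mind: the annotation works through a Spring proxy, so it applies when another bean calls findProductName, not when the method is called from inside ProductService itself.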

The real trick is in the strategy. How long does a piece of data live in the cache? When it expires, does it just vanish, or is it updated? This is where you move from basic setup to true craftsmanship. A time-to-live (TTL) policy is a good start: each entry expires after a set period, say a few minutes, and the next request reloads it from the database. For more consistency, you might use a cache-aside pattern, where the application manages the cache itself, filling it on reads and invalidating entries when the underlying data changes.
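To make the TTL idea concrete, here is a small plain-Java sketch. It assumes a hypothetical TtlCache class with an injectable clock (so expiry can be tested without waiting) and a loader that stands in for the database; real cache libraries handle this for you, usually with more care around memory limits.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical TTL cache: an entry older than ttlMillis is treated as a miss.
class TtlCache<K, V> {
    private static final class Entry<V> {
        final V value;
        final long expiresAt;

        Entry(V value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final LongSupplier clock;    // e.g. System::currentTimeMillis
    private final Function<K, V> loader; // stands in for the database query

    TtlCache(long ttlMillis, LongSupplier clock, Function<K, V> loader) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
        this.loader = loader;
    }

    V get(K key) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.compute(key, (k, current) -> {
            if (current == null || now >= current.expiresAt) {
                // Missing or expired: reload and restart the TTL window.
                return new Entry<V>(loader.apply(k), now + ttlMillis);
            }
            return current; // still fresh: keep the existing entry
        });
        return entry.value;
    }
}
```

Note that nothing is refreshed in the background here. An expired entry simply stops being served, and the next caller pays for the reload.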

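And because we’re talking microservices, you often need a cache that lives separately from each service: a distributed cache. This acts as a shared, fast memory for all your services. Because every service instance reads and invalidates the same entries, a change made through one service is not hidden behind another instance’s private, outdated copy. It’s a central hub for common data, keeping everyone on the same page.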

The most obvious win is speed. Latency drops. Your applications feel snappier. But the rewards go deeper.

Your main database gets to breathe. It handles fewer repetitive queries, which means it can focus its power on the complex transactions and writes that truly matter. This can delay or even eliminate costly database upgrades.

Then there’s resilience. If the database has a brief hiccup, for some non-critical data, the cache can still serve requests. It’s not a full backup, but it’s a cushion that keeps the user experience from completely crumbling during a minor glitch.

It also makes scaling smoother. As traffic spikes, your caching layer can absorb a huge amount of read traffic, letting your core systems scale at a more manageable pace.

But let’s pause for a question you might not voice aloud: Doesn’t this add complexity? It can. If not done thoughtfully, you can end up with stale data causing weird bugs, or a cache that eats up too much memory. The goal isn’t to cache everything—it’s to cache wisely. It’s about finding those specific pain points in your service interactions and applying the fix precisely there.

Imagine a simple “Product Info” service. Every time a product page loads, it calls this service. Without caching, every click hits the database. You add a caching layer. Now, the first request for product XYZ fetches from the database and stores the result. For the next hour (or whatever TTL you set), every request for product XYZ gets the data from the cache instantly.

What happens when the product price changes? Your service that updates the price must also clear that specific product’s entry from the cache. The next request will be a “cache miss,” fetching the fresh price from the database and repopulating the cache. This ensures users see the right price without overloading the system.
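The price-change scenario can be sketched as cache-aside with eviction on write. Everything named here is hypothetical: PriceService, the map that stands in for the database, and prices stored as cents in a long.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical price service: reads go through the cache, writes evict the entry.
class PriceService {
    private final Map<String, Long> database; // stands in for the real database (prices in cents)
    private final Map<String, Long> cache = new ConcurrentHashMap<>();
    final AtomicInteger databaseReads = new AtomicInteger(); // exposed only to make the effect visible

    PriceService(Map<String, Long> database) {
        this.database = database;
    }

    Long getPrice(String productId) {
        Long cached = cache.get(productId);
        if (cached != null) {
            return cached; // cache hit
        }
        Long fresh = database.get(productId); // cache miss: go to the source
        databaseReads.incrementAndGet();
        if (fresh != null) {
            cache.put(productId, fresh); // repopulate for later requests
        }
        return fresh;
    }

    void updatePrice(String productId, long newPrice) {
        database.put(productId, newPrice); // write to the source of truth first
        cache.remove(productId);           // then evict so the next read is a miss
    }
}
```

Evicting after the write keeps the window for stale reads small, but a read that overlaps the update can still put an old value back. Pairing eviction with a TTL is a common safety net for that case.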

This dance—between cache and source, between speed and freshness—is the heart of it. It’s not a “set and forget” component. It’s an active part of your data flow.

Starting doesn’t require a grand overhaul. Pick one service, one endpoint that’s clearly overloaded with repetitive reads. Implement a simple cache. Measure the difference. See how your database load lightens and how response times improve.
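For the "measure the difference" step, one simple signal is the cache hit ratio. The sketch below is an assumption-laden illustration: MeasuredCache is a hypothetical class, and in a real service these numbers would normally come from your cache library or metrics tooling, alongside response times and database load.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Function;

// Hypothetical cache wrapper that counts hits and misses.
class MeasuredCache<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // stands in for the database query
    private final LongAdder hits = new LongAdder();
    private final LongAdder misses = new LongAdder();

    MeasuredCache(Function<K, V> loader) {
        this.loader = loader;
    }

    V get(K key) {
        V cached = entries.get(key);
        if (cached != null) {
            hits.increment();
            return cached;
        }
        misses.increment();
        V loaded = loader.apply(key);
        if (loaded != null) {
            entries.put(key, loaded);
        }
        return loaded;
    }

    // Fraction of lookups served from the cache; 0.0 before any lookup.
    double hitRatio() {
        long h = hits.sum();
        long m = misses.sum();
        return (h + m) == 0 ? 0.0 : (double) h / (h + m);
    }
}
```

A ratio that stays low after warm-up suggests the endpoint is not dominated by repeated reads, or that the TTL is too short for the access pattern.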

The tools and patterns are out there, waiting to be applied. The key is to begin with intention, to solve a specific drag, and to understand that caching is less about a technology switch and more about designing a more considerate, efficient flow of information.

It turns a system that works into one that responds. And in the end, that’s what people notice—not the architecture, but the experience. A seamless, quick experience that feels effortless. That’s the quiet power of remembering what matters, right when it’s needed.

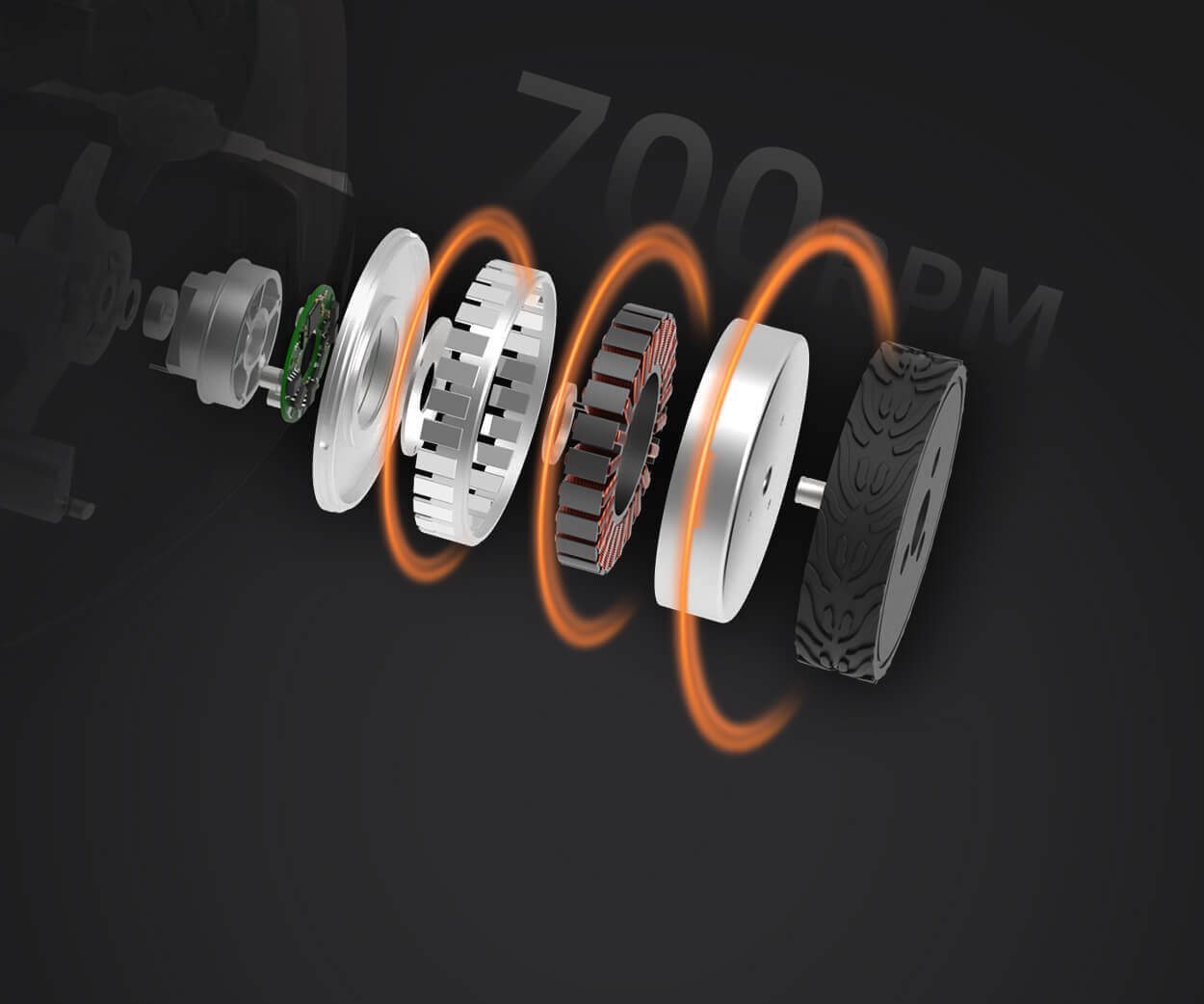


Update Time:2026-01-19
