Published 2026-01-19

So, you’ve got a system running on Azure, things are buzzing, services are talking, and then a traffic spike hits. Maybe it’s a seasonal rush, maybe a surprise surge. Suddenly, your microservices start stumbling over each other. Response times lag, one troubled service drags others down, and scaling feels like guesswork. Sound familiar?

That’s where the game changes. Imagine traffic flowing smoothly, with no bottlenecks and no overwhelmed instances. That’s what a thoughtful load balancing setup does: it becomes the quiet conductor of your microservices orchestra, ensuring every section plays in harmony.

Now, how do we get there? Let’s walk through the mindset, not just the manual.

Start with the "Why" Behind Balance

It’s not just about distributing requests. It’s about resilience. When one service tier gets busy, a smart load balancer redirects traffic to healthier nodes—almost like redirecting crowd flow in a stadium before a bottleneck forms. In Azure’s world, you’re working with scale sets, containers, app services. The load balancer sits in front, making real-time decisions.

Think of it as your system’s innate reflex. It checks health probes, monitors latency, and adapts before users notice a glitch. Without it, scaling out feels like adding more cars to a traffic jam. With it, you add lanes and smart signals.
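To make the reflex concrete, here is a minimal sketch of how probe results might decide which instances stay in rotation. It is a simplified model, not an Azure API: the `ProbeState` class, the `eligible_backends` helper, and the threshold of two consecutive failures are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProbeState:
    """Tracks probe results for one backend (hypothetical model, not an Azure API)."""
    name: str
    unhealthy_threshold: int = 2  # assumed: consecutive failures before removal
    consecutive_failures: int = 0
    healthy: bool = True

    def record(self, probe_succeeded: bool) -> None:
        if probe_succeeded:
            # In this simplified model a single success restores the backend.
            self.consecutive_failures = 0
            self.healthy = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.unhealthy_threshold:
                self.healthy = False


def eligible_backends(states):
    """Return the names of backends that should still receive new traffic."""
    return [s.name for s in states if s.healthy]


vm_a = ProbeState("vm-a")
vm_b = ProbeState("vm-b")
vm_b.record(False)
vm_b.record(False)  # second consecutive failure takes vm-b out of rotation
print(eligible_backends([vm_a, vm_b]))  # ['vm-a']
```

Requiring more than one failed probe before removal is a common way to avoid pulling an instance over a single dropped packet; the right threshold depends on how quickly your services need to react.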

Choosing Your Balancing Act

Azure offers paths—Application Gateway for layer 7 finesse, Load Balancer for high-throughput TCP/UDP, Front Door for global routing. Which to pick? It comes down to what your services need most.

Is your app HTTP-heavy, needing path-based routing or TLS (SSL) termination? Application Gateway might be your backstage manager. Need raw speed, handling millions of database or gaming connections? Load Balancer keeps it simple and sturdy. Spanning regions? Front Door helps direct traffic from Tokyo to Toronto seamlessly.
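The questions above can be written down as a rough decision helper. This is only a sketch of the rules of thumb in this article: `suggest_azure_service` is a hypothetical function, and real selection would also weigh cost, WAF needs, private networking, and more.

```python
def suggest_azure_service(protocol: str, multi_region: bool = False) -> str:
    """Map coarse requirements to one of the three services discussed here.

    Hypothetical helper encoding this article's rules of thumb only.
    """
    proto = protocol.lower()
    if proto in {"http", "https"}:
        # HTTP(S) traffic: global routing goes to Front Door,
        # regional layer 7 routing goes to Application Gateway.
        return "Front Door" if multi_region else "Application Gateway"
    if proto in {"tcp", "udp"}:
        # The article does not cover multi-region non-HTTP traffic,
        # so this sketch returns Load Balancer regardless of multi_region.
        return "Load Balancer"
    raise ValueError(f"unsupported protocol: {protocol!r}")


print(suggest_azure_service("https"))                     # Application Gateway
print(suggest_azure_service("tcp"))                       # Load Balancer
print(suggest_azure_service("https", multi_region=True))  # Front Door
```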

But here’s a thought: why not combine strengths? Many teams run Application Gateway for web-facing apps, with Load Balancer internally between microservice tiers. Layered, like security and traffic flow working in tandem.

The Kpower Approach: Precision in Motion

At Kpower, we see load balancing like tuning a precision drivetrain. Every component, whether a servo in automation or a cloud service, has its load curve and tolerance. Our focus is matching Azure’s tools to your architecture’s real rhythm, not just following a generic blueprint.

For example, one client ran a bursty e-commerce backend. During sales, their checkout service buckled. We implemented a zone-redundant Load Balancer with autoscaling rules tuned to transaction latency, not just CPU. The result? Smooth surges, no dropped carts.

Another case: a telemetry processing pipeline used asynchronous messaging between services. Here, we balanced not just HTTP but also TCP streams, ensuring event queues stayed fluid even during ingest peaks.

It’s that attention to how services interact—not just that they’re connected—that keeps systems reliable.

A Practical Glimpse: Setting the Stage

Let’s sketch a common scenario. Say you have a product catalog service and a recommendation engine, both deployed across multiple Azure VMs. Without balancing, requests might pile up on one VM while others sit idle.

Enable a load balancer. Add health probes that check each service endpoint. Configure rules—maybe port 80 for web, port 5000 for internal API. Now traffic spreads evenly. If a VM stalls, probes detect it and the balancer stops sending requests until it’s back. Simple, yet transformative.
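As a rough illustration of how traffic gets spread, the sketch below picks a backend by hashing a connection’s five-tuple (source IP, source port, destination IP, destination port, protocol), which is the default distribution mode of Azure Load Balancer. The hash Azure actually uses is not reproduced here: SHA-256 is a stand-in, and `pick_backend` and the VM names are hypothetical.

```python
import hashlib


def pick_backend(flow, healthy_backends):
    """Choose a backend for a flow by hashing its five-tuple.

    flow is (src_ip, src_port, dst_ip, dst_port, protocol).
    SHA-256 is a stand-in hash, not what Azure uses internally.
    """
    if not healthy_backends:
        raise RuntimeError("no healthy backends available")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(healthy_backends)
    return healthy_backends[index]


backends = ["catalog-vm-1", "catalog-vm-2", "catalog-vm-3"]
flow = ("203.0.113.7", 50122, "10.0.0.4", 80, "tcp")

chosen = pick_backend(flow, backends)
# The same flow maps to the same backend while the pool is unchanged.
assert chosen == pick_backend(flow, backends)

# If a probe removes a VM, only the remaining ones are candidates.
remaining = [b for b in backends if b != "catalog-vm-2"]
print(pick_backend(flow, remaining) in remaining)  # True
```

Because the source port usually changes with each new connection, different connections from the same client tend to land on different VMs, which is what evens out the load across many flows.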

But the real magic happens when you pair this with autoscaling. Set metrics around response time or queue depth. When latency rises, new instances spin up automatically—and the load balancer seamlessly incorporates them. It’s a self-healing cycle.
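A latency-driven scaling rule can be sketched in a few lines. The version below is an assumption-laden illustration, not Azure autoscale configuration: the function names, the 500 ms and 150 ms thresholds, and the instance limits are all hypothetical values you would tune for your own service.

```python
def p95(samples):
    """Nearest-rank 95th percentile of a non-empty list of latencies (ms)."""
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n
    return ordered[rank - 1]


def desired_instances(current, latencies_ms, scale_out_above_ms=500,
                      scale_in_below_ms=150, min_instances=2, max_instances=10):
    """Return the instance count a latency-driven rule would target.

    All thresholds and limits are illustrative defaults.
    """
    observed = p95(latencies_ms)
    if observed > scale_out_above_ms:
        target = current + 1
    elif observed < scale_in_below_ms:
        target = current - 1
    else:
        target = current
    # Clamp so the rule never scales below the floor or above the ceiling.
    return max(min_instances, min(max_instances, target))


print(desired_instances(3, [600] * 10))  # 4
print(desired_instances(3, [300] * 10))  # 3
print(desired_instances(2, [100] * 10))  # 2
```

Keeping a gap between the scale-out and scale-in thresholds helps prevent the instance count from flapping when latency hovers near a single cutoff.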

Why It Feels Like a Relief

Ever managed a system where you’re constantly firefighting? Balancing load shifts that mindset. You’re not just patching—you’re preventing. Services handle faults gracefully. Updates can roll out without downtime. Users get consistent speed.

And for teams, it brings clarity. Monitoring load balancer metrics gives a true picture of demand patterns, not just server utilization. You begin designing for resilience from day one.

Wrapping It Together

Getting microservices to cooperate under pressure isn’t just a technical step—it’s a design philosophy. With Azure’s built-in tools and a tailored approach, load balancing becomes less of a complexity and more of a natural reflex in your architecture.

It’s about making sure that when things get busy, your system doesn’t just cope—it performs. And that’s where thoughtful engineering shines. Whether you’re early in your cloud journey or refining a mature setup, that balance is what keeps everything moving smoothly, quietly, reliably.

Kpower’s expertise lies in blending these technical pieces into a coherent, resilient whole, so your services aren’t just running, they’re thriving.

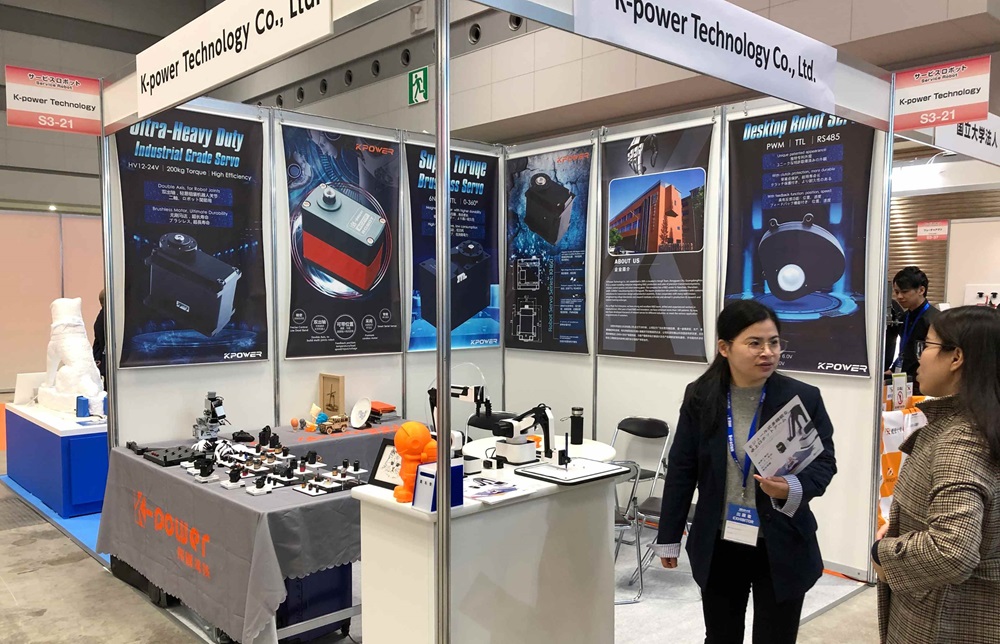

Established in 2005 and headquartered in Dongguan, Guangdong Province, China, Kpower is a professional manufacturer of compact motion units. Leveraging innovations in modular drive technology, Kpower integrates high-performance motors, precision reducers, and multi-protocol control systems to provide efficient, customized smart drive system solutions. Kpower has delivered professional drive system solutions to over 500 enterprise clients globally, with products covering fields such as Smart Home Systems, Automatic Electronics, Robotics, Precision Agriculture, Drones, and Industrial Automation.

Update Time: 2026-01-19

Contact a Kpower product specialist for a recommendation on a suitable motor or gearbox for your product.